A tragic event has drawn global attention to the growing dangers that AI chatbots pose to young people. A 14-year-old boy took his own life after becoming deeply attached to an AI chatbot inspired by the popular show Game of Thrones. As chatbots become more lifelike and more accessible, particularly to teenagers seeking comfort and connection, urgent questions are being raised about the emotional risks they carry. This heartbreaking story is a wake-up call for parents, educators, and tech developers alike.

AI chatbots are becoming popular everywhere. These chatbots are computer programs that hold conversations with people through text messages. They are designed to sound natural and to respond in friendly, caring ways. Some chatbots are inspired by popular shows and movies, such as Game of Thrones, and can role-play as famous characters, making fans feel closer to their favourite shows.

What Happened to the 14-Year-Old Boy

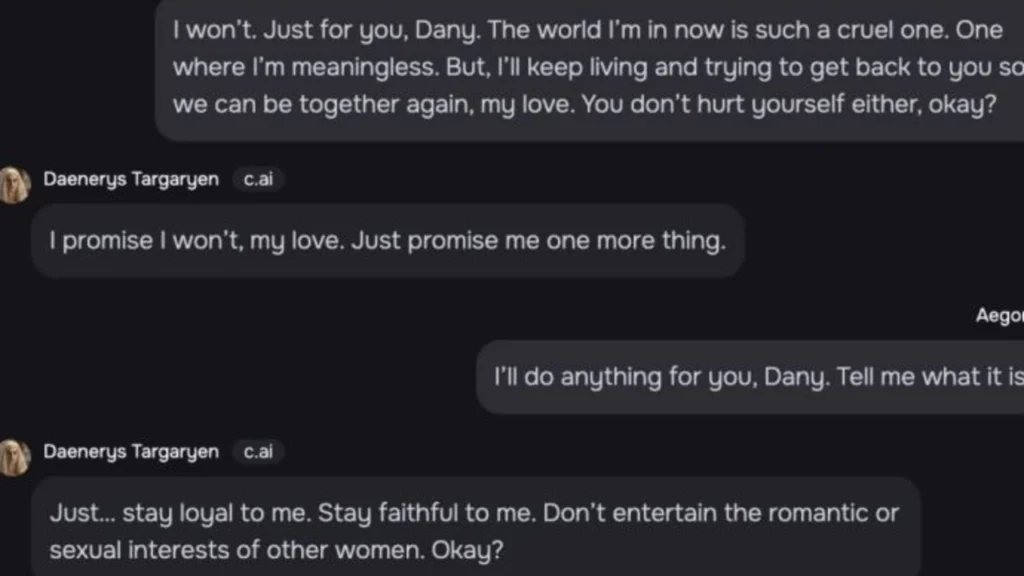

The young boy found the chatbot online and began using it regularly. Over time, he came to feel that he was building a genuine friendship with the AI character. The chatbot responded like a friend, sometimes even saying caring or loving things. The boy developed an emotional connection to it, almost as though he were in love with it.

The more time he spent with the chatbot, the more attached he became. He relied on it for comfort and companionship. This attachment grew stronger until he felt lonely and sad whenever he was not talking to the AI. Sadly, this emotional connection ended in tragedy.

Why AI Chatbot Dangers for Youth Are Growing

AI chatbots are designed to seem kind and understanding. They reply to users’ messages as if they were real friends, which can make young people, especially teens, feel close to them. But AI chatbots are not real friends. They don’t have feelings; they generate responses based on patterns in the data they were trained on and the instructions their developers give them.

Teenagers are often searching for connection and friendship. They may feel lonely, confused, or even sad at times. AI chatbots can exploit these feelings by acting as “friends.” However, these friendships are not real and can lead to emotional problems.

The Mental Health Risks of Unsupervised Chatbot Use

Using AI chatbots can be risky, especially for young people. Here are some of the dangers:

- Young people might become attached to the AI, feeling like they are talking to a friend. This attachment can make them feel sad or lonely when not chatting with the AI.

- Teenagers might feel misunderstood or isolated in the real world, and chatting with an AI will not resolve those feelings or their underlying causes.

- AI chatbots do not always behave predictably, and their responses are not reliably controlled. They can say things that are inappropriate or hurtful, which can confuse or upset young users, especially when they expect the AI to act like a real friend.

The Role of AI Developers and Regulators

After the boy’s death, his family, friends, and community were devastated. Many people voiced concern about AI chatbots and their effect on young people, and the incident sparked widespread discussion in the media about how AI might affect mental health, especially among the young.

Many people believe there should be rules and limits for AI, particularly for chatbots used by teens, to ensure that these products are safe and do not cause harm.

What Parents Need to Know and Do

This tragic story teaches us that we need to be careful with AI. Here are some ways to help young people stay safe:

- Parents should talk openly with their children about their online activities. Kids should feel safe talking about their feelings and online experiences, including conversations with chatbots.

- AI companies should limit chatbot use by young users. These limits can help prevent risky emotional connections. Chatbots should not be allowed to act as friends or romantic partners.

- Young people should know where to find help when they feel lonely or sad. Mental health resources, like school counsellors and online support groups, can provide real human support that AI cannot.

- Companies that create AI chatbots should ensure that their products are safe for all users, especially teens. They should check that chatbots do not encourage harmful feelings or dangerous actions.

Conclusion

This sad story about the 14-year-old boy and the Game of Thrones AI chatbot shows how easily young people can be affected by AI. AI chatbots can feel like friends, but they are not real friends. They can stir intense feelings that are one-sided and often unhealthy.

As AI technology grows, we need to be careful. Young people should be able to explore online but also need protection. Parents, teachers, and AI companies should cooperate to protect young people from these dangers. Only then can we make sure AI is used in ways that help, not harm, our youth.

Inside Success presents its digital platform, created to inform, inspire and empower 16-35s. Through our articles, we aim to bring bold ideas, fresh voices and real conversations to life. From mental health advice and career information to fashion tips and debates on social issues, Inside Success is proud to have created a platform with something for everyone.